CRUD REST API with Node.js, Express, and PostgreSQL – LogRocket Blog

Editor’s note: This post was updated on 06 June 2022 to reflect updates to the pgAdmin client.

For a modern web developer, knowing how to work with APIs to facilitate communication between software systems is paramount. In this tutorial, we’ll learn how to create a CRUD RESTful API in a Node.js environment that runs on an Express server and uses a PostgreSQL database. We’ll also walk through connecting an Express server with PostgreSQL using node-postgres.

Our API will handle the HTTP request methods that correspond to operations on the PostgreSQL database from which the API gets its data. You’ll also learn how to install PostgreSQL and work with it through the command-line interface.

Our goal is to allow CRUD operations, GET, POST, PUT, and DELETE, on the API, which will run the corresponding database commands. To do so, we’ll set up a route for each endpoint and a function for each query.


To follow along with this tutorial, you’ll need:

- Familiarity with the JavaScript syntax and fundamentals

- Basic knowledge of working with the command line

- Node.js and npm installed

The complete code for the tutorial is available on this GitHub repo. Let’s get started!


What is a RESTful API?

Representational State Transfer (REST) defines a set of standards for web services. An API is an interface that software programs use to communicate with each other. Therefore, a RESTful API is an API that conforms to the REST architectural style and constraints. REST systems are stateless, scalable, cacheable, and have a uniform interface.

What is a CRUD API?

When building an API, you want your model to provide four basic functionalities. It should be able to create, read, update, and delete resources. This set of essential operations is commonly referred to as CRUD.

RESTful APIs most commonly utilize HTTP requests. Four of the most common HTTP methods in a REST environment are GET, POST, PUT, and DELETE, which are the methods by which a developer can create a CRUD system.

- Create: Use the HTTP POST method to create a resource in a REST environment

- Read: Use the GET method to read a resource, retrieving data without altering it

- Update: Use the PUT method to update a resource

- Delete: Use the DELETE method to remove a resource from the system
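The mapping above can be sketched as a small lookup table. This is only an illustration: the names `crudMap` and `crudOperationFor` are made up for this sketch, and the SQL column lists the kind of statement that typically backs each operation in a relational database such as PostgreSQL.

```javascript
// Illustrative lookup table (crudMap is a hypothetical name, not part of the tutorial code).
// Each CRUD operation is paired with the HTTP method used for it in this tutorial
// and the kind of SQL statement that typically backs it.
const crudMap = {
  create: { httpMethod: 'POST', sql: 'INSERT' },
  read: { httpMethod: 'GET', sql: 'SELECT' },
  update: { httpMethod: 'PUT', sql: 'UPDATE' },
  delete: { httpMethod: 'DELETE', sql: 'DELETE' },
}

// Returns the CRUD operation name for an HTTP method, or undefined if none matches.
function crudOperationFor(httpMethod) {
  const wanted = String(httpMethod).toUpperCase()
  return Object.keys(crudMap).find((op) => crudMap[op].httpMethod === wanted)
}

console.log(crudOperationFor('put'))
console.log(crudMap.create.sql)
```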

What is Express?

According to the official Express documentation, Express is a fast, unopinionated, minimalist web framework for Node.js. Express is one of the most popular frameworks for Node.js. In fact, the E in the MERN, MEVN, and MEAN stacks stands for Express.

Although Express is minimalist, it is also very flexible, leading to the development of various Express middlewares that you can use to address almost any task or problem imaginable.

What is PostgreSQL?

PostgreSQL, commonly referred to as Postgres, is a free, open source relational database management system. You might be familiar with a few other similar database systems, like MySQL, Microsoft SQL Server, or MariaDB, which compete with PostgreSQL.

PostgreSQL is a robust relational database that has been around since 1997 and is available on all major operating systems, Linux, Windows, and macOS. Since PostgreSQL is known for stability, extensibility, and standards compliance, it’s a popular choice for developers and companies.

It’s also possible to create a Node.js RESTful CRUD API using Sequelize. Sequelize is a promise-based Node.js ORM for Postgres, MySQL, MariaDB, SQLite, and Microsoft SQL Server.


What is node-postgres?

node-postgres, or pg, is a nonblocking PostgreSQL client for Node.js. Essentially, node-postgres is a collection of Node.js modules for interfacing with a PostgreSQL database.

node-postgres supports many features, including callbacks, promises, async/await, connection pooling, prepared statements, cursors, rich type parsing, and C/C++ bindings.
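To make the first few of those features concrete, here is a minimal sketch of the same query written in callback style and in async/await style. It assumes only that `pool.query()` calls a callback with `(error, result)` when one is passed and returns a promise otherwise, which is how a node-postgres pool behaves. The `fakePool` object and the function names are hypothetical stand-ins so the sketch runs without a database; a real pool would return the timestamp as a Date rather than a string.

```javascript
// `pool` is expected to behave like a node-postgres Pool:
// query(text, callback) invokes the callback with (error, result), and
// query(text) without a callback returns a promise for the result.

// Callback style
function getServerTimeWithCallback(pool, done) {
  pool.query('SELECT NOW() AS now', (error, result) => {
    if (error) {
      done(error)
      return
    }
    done(null, result.rows[0].now)
  })
}

// async/await style
async function getServerTimeWithAwait(pool) {
  const result = await pool.query('SELECT NOW() AS now')
  return result.rows[0].now
}

// Hypothetical stand-in for a real pool, returning a fixed row.
const fakePool = {
  query(text, callback) {
    const result = { rows: [{ now: '2022-06-06T00:00:00.000Z' }] }
    if (typeof callback === 'function') {
      callback(null, result)
      return undefined
    }
    return Promise.resolve(result)
  },
}

getServerTimeWithCallback(fakePool, (error, now) => console.log('callback:', error || now))
getServerTimeWithAwait(fakePool).then((now) => console.log('await:', now))
```

The rest of this tutorial uses the callback style throughout.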

Creating a PostgreSQL database

We’ll begin this tutorial by installing PostgreSQL, creating a new user, creating a database, and initializing a table with a schema and some data.

Installation

If you’re using Windows, download a Windows installer of PostgreSQL.

If you’re using a Mac, this tutorial assumes you have Homebrew installed on your computer as a package manager for installing new programs. If you don’t, simply click on the link and follow the instructions.

Open up the Terminal and install postgresql with brew:

brew install postgresql

You may see instructions on the web reading brew install postgres instead of postgresql; both options will install PostgreSQL on your computer.

After the installation is complete, we’ll want to get postgresql up and running, which we can do with services start:

    brew services start postgresql
    ==> Successfully started `postgresql` (label: homebrew.mxcl.postgresql)

If at any point you want to stop the postgresql service, you can run brew services stop postgresql.

With PostgreSQL installed, next, we’ll connect to the postgres command line where we can run SQL commands.

PostgreSQL command prompt

psql is the PostgreSQL interactive terminal. Running psql will connect you to a PostgreSQL host. Running psql --help will give you more information about the available options for connecting with psql:

- -h, --host=HOSTNAME: The database server host or socket directory; the default is local socket

- -p, --port=PORT: The database server port; the default is 5432

- -U, --username=USERNAME: The database username; the default is your_username

- -w, --no-password: Never prompt for password

- -W, --password: Force password prompt, which should happen automatically

We’ll connect to the default postgres database with the default login information and no option flags:

psql postgres

You’ll see that we’ve entered into a new connection. We’re now inside psql in the postgres database. The prompt ends with a # to denote that we’re logged in as the superuser, or root:

postgres=#

Commands within psql start with a backslash \. To test our first command, we can check what database, user, and port we’ve connected to using the \conninfo command.

    postgres=# \conninfo
    You are connected to database "postgres" as user "your_username" via socket in "/tmp" at port "5432".

The reference table below includes a few common commands that we’ll use throughout this tutorial:

- \q: Exit psql connection

- \c: Connect to a new database

- \dt: List all tables

- \du: List all roles

- \list: List databases

Let’s create a new database and user so we’re not using the default accounts, which have superuser privileges.

Creating a role in Postgres

First, we’ll create a role called me and give it a password of password. A role can function as a user or a group. In this case, we’ll use it as a user:

postgres=# CREATE ROLE me WITH LOGIN PASSWORD 'password';

We want me to be able to create a database:

postgres=# ALTER ROLE me CREATEDB;

You can run \du to list all roles and users:

    me       | Create DB                         | {}
    postgres | Superuser, Create role, Create DB | {}

Now, we want to create a database from the me user. Exit from the default session with \q for quit:

postgres=# \q

We’re back in our computer’s default terminal connection. Now, we’ll connect to postgres as me:

psql -d postgres -U me

Instead of postgres=#, our prompt now shows postgres=> , meaning we’re no longer logged in as a superuser.

Creating a database in Postgres

We can create a database with the SQL command as follows:

postgres=> CREATE DATABASE api;

Use the \list command to see the available databases:

    Name | Owner | Encoding | Collate     | Ctype       |
    api  | me    | UTF8     | en_US.UTF-8 | en_US.UTF-8 |

Let’s connect to the new api database with me using the \c connect command:

    postgres=> \c api
    You are now connected to database "api" as user "me".
    api=>

Our prompt now shows that we’re connected to api.

Creating a table in Postgres

Finally, in the psql command prompt, we’ll create a table called users with three fields: two VARCHAR columns, name and email, and an auto-incrementing PRIMARY KEY called ID:

    api=> CREATE TABLE users (
      ID SERIAL PRIMARY KEY,
      name VARCHAR(30),
      email VARCHAR(30)
    );

Make sure not to use the backtick ` character when creating and working with tables in PostgreSQL. While backticks are allowed in MySQL, they’re not valid in PostgreSQL. Also ensure that you do not have a trailing comma in the CREATE TABLE command.

Let’s add some data to work with by using INSERT INTO users to add two entries, one for Jerry and one for George.

Let’s make sure that the information above was correctly added by getting all entries in users:

    api=> SELECT * FROM users;
     id |  name  | email
    ----+--------+--------------------
      1 | Jerry  | [email protected]
      2 | George | [email protected]

Now, we have a user, database, table, and some data. We can begin building our Node.js RESTful API to connect to this data, stored in a PostgreSQL database.

At this point, we’re finished with all of our PostgreSQL tasks, and we can begin setting up our Node.js app and Express server.

Setting up an Express server

To set up a Node.js app and Express server, first create a directory for the project to live in:

    mkdir node-api-postgres
    cd node-api-postgres

You can either run npm init -y to create a package.json file, or copy the code below into a package.json file:

    {
      "name": "node-api-postgres",
      "version": "1.0.0",
      "description": "RESTful API with Node.js, Express, and PostgreSQL",
      "main": "index.js",
      "license": "MIT"
    }

We’ll want to install Express for the server and node-postgres to connect to PostgreSQL:

npm i express pg

Now, we have our dependencies loaded into node_modules and package.json.

Create an index.js file, which we’ll use as the entry point for our server. At the top, we’ll require the express module and the body-parser middleware, which npm installs as a dependency of Express, and we’ll set our app and port variables:

    const express = require('express')
    const bodyParser = require('body-parser')
    const app = express()
    const port = 3000

    app.use(bodyParser.json())
    app.use(
      bodyParser.urlencoded({
        extended: true,
      })
    )

We’ll tell a route to look for a GET request on the root / URL and return some JSON:

    app.get('/', (request, response) => {
      response.json({ info: 'Node.js, Express, and Postgres API' })
    })

Now, set the app to listen on the port you set:

    app.listen(port, () => {
      console.log(`App running on port ${port}.`)
    })

From the command line, we can start the server by running index.js:

    node index.js
    App running on port 3000.

Go to http://localhost:3000 in the URL bar of your browser, and you’ll see the JSON we set earlier:

    {
      info: "Node.js, Express, and Postgres API"
    }

The Express server is running now, but it’s only sending some static JSON data that we created. The next step is to connect to PostgreSQL from Node.js to be able to make dynamic queries.

Connecting to a Postgres database using a Client

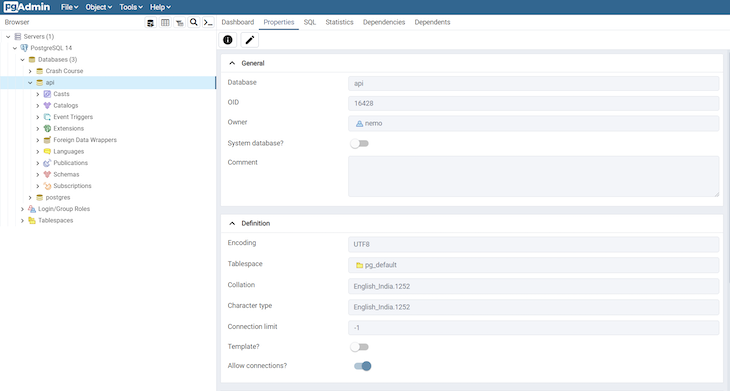

A popular client for accessing Postgres databases is the pgAdmin client. The pgAdmin application is available for various platforms. If you want to have a graphical user interface for your Postgres databases, you can go to the download page and download the necessary package.

Creating and querying your database using pgAdmin is simple. Click the Object option in the top menu, select Create, and choose Database to create a new database. All the databases are listed in the side menu. You can run SQL queries against a database by selecting it there.

Connecting to a Postgres database from Node.js

We’ll use the node-postgres module to create a pool of connections. That way, we don’t have to open and close a client each time we make a query.

A popular option for production pooling would be to use pgBouncer, a lightweight connection pooler for PostgreSQL.

Create a file called queries.js and set up the configuration of your PostgreSQL connection:

    const Pool = require('pg').Pool
    const pool = new Pool({
      user: 'me',
      host: 'localhost',
      database: 'api',
      password: 'password',
      port: 5432,
    })

In a production environment, you would want to put your configuration details in a separate file with restrictive permissions so that it is not accessible from version control. But, for the simplicity of this tutorial, we’ll keep it in the same file as the queries.
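One common alternative to a separate file is to read the connection details from environment variables. The sketch below is one possible approach, not the tutorial's own: `buildPoolConfig` is a hypothetical helper, the fallback values are the ones used in this tutorial, and the `PG*` variable names follow the libpq convention that node-postgres also recognizes.

```javascript
// Hypothetical helper: builds a Pool configuration object from environment variables.
// The fallbacks are this tutorial's local development values.
function buildPoolConfig(env) {
  return {
    user: env.PGUSER || 'me',
    host: env.PGHOST || 'localhost',
    database: env.PGDATABASE || 'api',
    password: env.PGPASSWORD, // no fallback, so the secret stays out of the source
    port: Number(env.PGPORT) || 5432,
  }
}

const config = buildPoolConfig(process.env)
// Print the configuration with the password masked.
console.log({ ...config, password: config.password ? '***' : undefined })

// In queries.js, the result would be passed to the pool:
// const pool = new Pool(buildPoolConfig(process.env))
```

With this in place, the password could be supplied when starting the server, for example with `PGPASSWORD=... node index.js`.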

The aim of this tutorial is to allow CRUD operations, GET, POST, PUT, and DELETE on the API, which will run the corresponding database commands. To do so, we’ll set up a route for each endpoint and a function corresponding to each query.

Creating routes for CRUD operations

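We’ll handle six routes, as shown below. The root route already has an inline handler in index.js, listed here as displayHome(); each of the other five gets its own function in queries.js. First, create the functions. Then, export them so they’re accessible: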

- GET: / | displayHome()

- GET: /users | getUsers()

- GET: /users/:id | getUserById()

- POST: /users | createUser()

- PUT: /users/:id | updateUser()

- DELETE: /users/:id | deleteUser()

In index.js, we made an app.get() for the root endpoint with a function in it. Now, in queries.js, we’ll create endpoints that will display all users, display a single user, create a new user, update an existing user, and delete a user.

GET all users

Our first endpoint will be a GET request. We can put the raw SQL that will touch the api database inside the pool.query(). We’ll SELECT all users and order by ID.

    const getUsers = (request, response) => {
      pool.query('SELECT * FROM users ORDER BY id ASC', (error, results) => {
        if (error) {
          throw error
        }
        response.status(200).json(results.rows)
      })
    }

GET a single user by ID

For our /users/:id request, we’ll get the custom id parameter from the URL and use a WHERE clause to display the result.

In the SQL query, we’re looking for id=$1. In this instance, $1 is a numbered placeholder that PostgreSQL uses natively instead of the ? placeholder that you may recognize from other variations of SQL:

    const getUserById = (request, response) => {
      const id = parseInt(request.params.id)

      pool.query('SELECT * FROM users WHERE id = $1', [id], (error, results) => {
        if (error) {
          throw error
        }
        response.status(200).json(results.rows)
      })
    }

POST a new user

The API will take a GET and POST request to the /users endpoint. In the POST request, we’ll add a new user. In this function, we’re extracting the name and email properties from the request body and inserting the values with INSERT:

    const createUser = (request, response) => {
      const { name, email } = request.body

      pool.query('INSERT INTO users (name, email) VALUES ($1, $2) RETURNING *', [name, email], (error, results) => {
        if (error) {
          throw error
        }
        response.status(201).send(`User added with ID: ${results.rows[0].id}`)
      })
    }

PUT updated data in an existing user

The /users/:id endpoint will also take two HTTP requests, the GET we created for getUserById and a PUT to modify an existing user. For this query, we’ll combine what we learned in GET and POST to use the UPDATE clause.

It’s worth noting that PUT is idempotent, meaning the exact same call can be made over and over and will produce the same result. PUT is different from POST, in which the exact same call repeated will continuously make new users with the same data:

    const updateUser = (request, response) => {
      const id = parseInt(request.params.id)
      const { name, email } = request.body

      pool.query(
        'UPDATE users SET name = $1, email = $2 WHERE id = $3',
        [name, email, id],
        (error, results) => {
          if (error) {
            throw error
          }
          response.status(200).send(`User modified with ID: ${id}`)
        }
      )
    }

DELETE a user

Finally, we’ll use the DELETE clause on /users/:id to delete a specific user by ID. This call is very similar to our getUserById() function:

    const deleteUser = (request, response) => {
      const id = parseInt(request.params.id)

      pool.query('DELETE FROM users WHERE id = $1', [id], (error, results) => {
        if (error) {
          throw error
        }
        response.status(200).send(`User deleted with ID: ${id}`)
      })
    }

Exporting CRUD functions in a REST API

To access these functions from index.js, we’ll need to export them. We can do so with module.exports, creating an object of functions. Since we’re using the ES6 syntax, we can write getUsers instead of getUsers:getUsers and so on:

    module.exports = {
      getUsers,
      getUserById,
      createUser,
      updateUser,
      deleteUser,
    }

Our complete queries.js file is below:

    const Pool = require('pg').Pool
    const pool = new Pool({
      user: 'me',
      host: 'localhost',
      database: 'api',
      password: 'password',
      port: 5432,
    })

    const getUsers = (request, response) => {
      pool.query('SELECT * FROM users ORDER BY id ASC', (error, results) => {
        if (error) {
          throw error
        }
        response.status(200).json(results.rows)
      })
    }

    const getUserById = (request, response) => {
      const id = parseInt(request.params.id)

      pool.query('SELECT * FROM users WHERE id = $1', [id], (error, results) => {
        if (error) {
          throw error
        }
        response.status(200).json(results.rows)
      })
    }

    const createUser = (request, response) => {
      const { name, email } = request.body

      // RETURNING * makes PostgreSQL send back the inserted row, including its generated id
      pool.query('INSERT INTO users (name, email) VALUES ($1, $2) RETURNING *', [name, email], (error, results) => {
        if (error) {
          throw error
        }
        response.status(201).send(`User added with ID: ${results.rows[0].id}`)
      })
    }

    const updateUser = (request, response) => {
      const id = parseInt(request.params.id)
      const { name, email } = request.body

      pool.query(
        'UPDATE users SET name = $1, email = $2 WHERE id = $3',
        [name, email, id],
        (error, results) => {
          if (error) {
            throw error
          }
          response.status(200).send(`User modified with ID: ${id}`)
        }
      )
    }

    const deleteUser = (request, response) => {
      const id = parseInt(request.params.id)

      pool.query('DELETE FROM users WHERE id = $1', [id], (error, results) => {
        if (error) {
          throw error
        }
        response.status(200).send(`User deleted with ID: ${id}`)
      })
    }

    module.exports = {
      getUsers,
      getUserById,
      createUser,
      updateUser,
      deleteUser,
    }

Setting up CRUD functions in a REST API

Now that we have all of our queries, we need to pull them into the index.js file and make endpoint routes for all the query functions we created.

To get all the exported functions from queries.js, we’ll require the file and assign it to a variable:

    const db = require('./queries')

Now, for each endpoint, we’ll set the HTTP request method, the endpoint URL path, and the relevant function:

    app.get('/users', db.getUsers)
    app.get('/users/:id', db.getUserById)
    app.post('/users', db.createUser)
    app.put('/users/:id', db.updateUser)
    app.delete('/users/:id', db.deleteUser)

Below is our complete index.js file, the entry point of the API server:

    const express = require('express')
    const bodyParser = require('body-parser')
    const app = express()
    const db = require('./queries')
    const port = 3000

    app.use(bodyParser.json())
    app.use(
      bodyParser.urlencoded({
        extended: true,
      })
    )

    app.get('/', (request, response) => {
      response.json({ info: 'Node.js, Express, and Postgres API' })
    })

    app.get('/users', db.getUsers)
    app.get('/users/:id', db.getUserById)
    app.post('/users', db.createUser)
    app.put('/users/:id', db.updateUser)
    app.delete('/users/:id', db.deleteUser)

    app.listen(port, () => {
      console.log(`App running on port ${port}.`)
    })

With just these two files, we have a server, database, and our API all set up. You can start up the server by running index.js again:

    node index.js
    App running on port 3000.

Now, if you go to http://localhost:3000/users or http://localhost:3000/users/1, you’ll see the JSON response of the two GET requests.

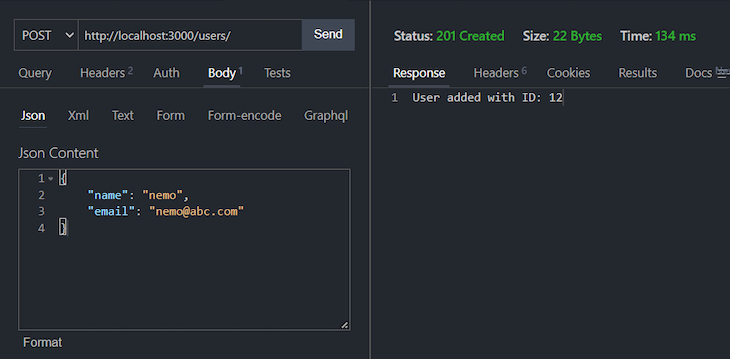
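While trying the GET routes, it is worth requesting an ID that does not exist, or one that is not a number, such as /users/abc. The getUserById() handler from this tutorial answers both with a 200 status and an empty array, and it throws inside the query callback if the query fails, which Express does not catch. The following is a sketch of a more defensive variant, not the tutorial's own code: the factory `makeGetUserById`, the stub pool, and the stub response are hypothetical names introduced so the example runs without a database, and returning a single object instead of an array is a design choice of this sketch.

```javascript
// Hypothetical factory: builds a getUserById handler around any pool-like object.
function makeGetUserById(pool) {
  return (request, response) => {
    // parseInt stops at the first non-digit, so "abc" gives NaN but "1abc" still gives 1
    const id = Number.parseInt(request.params.id, 10)
    if (Number.isNaN(id)) {
      response.status(400).json({ error: 'id must be an integer' })
      return
    }
    pool.query('SELECT * FROM users WHERE id = $1', [id], (error, results) => {
      if (error) {
        // Report the failure to the client instead of throwing inside the callback
        response.status(500).json({ error: 'database error' })
        return
      }
      if (results.rows.length === 0) {
        response.status(404).json({ error: `no user with id ${id}` })
        return
      }
      response.status(200).json(results.rows[0])
    })
  }
}

// Stand-ins for the database pool and the Express response object.
const fakeUsers = [{ id: 1, name: 'Jerry' }, { id: 2, name: 'George' }]
const stubPool = {
  query(text, values, callback) {
    callback(null, { rows: fakeUsers.filter((user) => user.id === values[0]) })
  },
}

function makeStubResponse() {
  const response = {
    statusCode: null,
    body: null,
    status(code) {
      response.statusCode = code
      return response
    },
    json(payload) {
      response.body = payload
      return response
    },
  }
  return response
}

const getUserById = makeGetUserById(stubPool)
for (const rawId of ['1', '42', 'abc']) {
  const response = makeStubResponse()
  getUserById({ params: { id: rawId } }, response)
  console.log(rawId, response.statusCode, response.body)
}
```

In the real application, the same handler body would use the module-level pool from queries.js instead of an injected one.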

To test our POST, PUT, and DELETE requests, we can use a tool like Postman or a VS Code extension like Thunder Client to send the HTTP requests. You can also use curl, a command-line tool that is already available on your terminal.
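The requests can also be scripted from Node.js itself. The sketch below builds the arguments for a POST to /users; `buildCreateUserRequest` is a hypothetical helper, and the name and email are made-up sample values. It assumes the server from this tutorial is listening on port 3000 and that Node.js 18 or later is used, where `fetch` is available globally.

```javascript
// Hypothetical helper: builds the URL and options for fetch() to create a user.
function buildCreateUserRequest(name, email, baseUrl = 'http://localhost:3000') {
  return {
    url: `${baseUrl}/users`,
    options: {
      method: 'POST',
      // bodyParser.json() only parses bodies sent with a JSON content type
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify({ name, email }),
    },
  }
}

const createRequest = buildCreateUserRequest('Elaine', 'elaine@example.com')
console.log(createRequest.options.method, createRequest.url, createRequest.options.body)

// With the server running, the request could be sent like this:
// fetch(createRequest.url, createRequest.options)
//   .then((res) => res.text())
//   .then(console.log)
```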

A tool like Postman or Thunder Client makes it simple to query endpoints with different HTTP methods. Enter your URL, choose the HTTP method, insert a JSON value if the endpoint is a PUT or POST route, and hit send.

For a POST request, for example, the method selector is set to POST, the URL beside the method is the API endpoint, and the JSON content is the data sent to the endpoint. You can hit the other routes similarly.
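Sending the same POST and PUT requests more than once is a quick way to see the difference in idempotency between the two methods. The sketch below imitates that behavior with an in-memory store rather than the real API: `createStore` and its `post` and `put` methods are hypothetical stand-ins for the createUser() and updateUser() handlers, and the sample users are made up.

```javascript
// In-memory stand-in for the users table (no database involved).
function createStore() {
  const rows = new Map()
  let nextId = 1
  return {
    // POST-like: every call inserts a new row with a fresh id
    post(user) {
      const id = nextId++
      rows.set(id, { id, ...user })
      return id
    },
    // PUT-like: every call overwrites the row with the given id
    put(id, user) {
      if (!rows.has(id)) return false
      rows.set(id, { id, ...user })
      return true
    },
    all() {
      return [...rows.values()]
    },
  }
}

const store = createStore()
const sampleUser = { name: 'Kramer', email: 'kramer@example.com' }
store.post(sampleUser)
store.post(sampleUser) // same call again: a second row appears
const sampleUpdate = { name: 'Cosmo', email: 'cosmo@example.com' }
store.put(1, sampleUpdate)
store.put(1, sampleUpdate) // same call again: the stored data does not change
console.log(store.all())
```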

Conclusion

You should now have a functioning API server that runs on Node.js and is hooked up to an active PostgreSQL database. In this tutorial, we learned how to install and set up PostgreSQL in the command line, create users, databases, and tables, and run SQL commands. We also learned how to create an Express server that can handle multiple HTTP methods and use the pg module to connect to PostgreSQL from Node.js.

With this knowledge, you should be able to build on this API and utilize it for your own personal or professional development projects.